- KUBERNETES INSTALL APACHE SPARK ON KUBERNETES HOW TO

- KUBERNETES INSTALL APACHE SPARK ON KUBERNETES DRIVER

- KUBERNETES INSTALL APACHE SPARK ON KUBERNETES SERIES

- KUBERNETES INSTALL APACHE SPARK ON KUBERNETES FREE

You need a Docker image that embeds a Spark distribution. This section explains how to build an "official" Spark Docker image and how to run a basic Spark application with it.

Spark (starting with version 2.3) ships with Dockerfiles that can be used to build different Spark Docker images, and to customize them to match an individual application's needs, for use with a Kubernetes backend. They can be found in the kubernetes/dockerfiles/ directory.
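As a rough sketch of the image build step, the Spark distribution also ships a helper script, bin/docker-image-tool.sh, that wraps those Dockerfiles. The Python helper below only assembles the command line for it; the Spark home, registry name, and tag are hypothetical placeholders, and actually running the command assumes Docker and an unpacked Spark distribution are available.

```python
import shlex
from pathlib import PurePosixPath


def image_tool_command(spark_home, repo, tag, action="build", pyspark=False):
    """Return the argv list for Spark's bin/docker-image-tool.sh."""
    if action not in ("build", "push"):
        raise ValueError("action must be 'build' or 'push'")
    home = PurePosixPath(spark_home)
    cmd = [str(home / "bin" / "docker-image-tool.sh"), "-r", repo, "-t", tag]
    if pyspark:
        # -p selects the Dockerfile used for the additional PySpark image.
        dockerfile = (home / "kubernetes" / "dockerfiles" / "spark"
                      / "bindings" / "python" / "Dockerfile")
        cmd += ["-p", str(dockerfile)]
    cmd.append(action)
    return cmd


# Hypothetical values, for illustration only.
build_cmd = image_tool_command(
    "/opt/spark", "registry.example.com/demo", "v1", pyspark=True
)
print(shlex.join(build_cmd))

# To really build the images, something like this could be used:
# import subprocess
# subprocess.run(build_cmd, check=True, cwd="/opt/spark")
```

Keeping the command as a list rather than a single string avoids shell quoting problems if it is later passed to subprocess.run.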

Spark driver pods need a Kubernetes service account in the pod's namespace that has permissions to create, get, list, and delete executor pods. An example RBAC setup for this creates a driver service account named driver-sa in the namespace spark-jobs, with an RBAC role binding giving the service account the needed permissions.

In the priority configuration used here, only the PriorityClass "rush" is allowed to preempt lower-priority pods. Pods with other priorities will be placed in the scheduling queue ahead of lower-priority pods, but they cannot preempt other pods. They'll just have to wait until sufficient resources are free to be scheduled.

For our experiments, we will use Volcano, a batch scheduler for Kubernetes that is well suited to scheduling Spark application pods more efficiently than the default kube-scheduler. The main reason is that Volcano allows "group scheduling" or "gang scheduling": while the default scheduler of Kubernetes schedules containers one by one, Volcano ensures that a gang of related containers (here, the Spark driver and its executors) can be scheduled at the same time. If for any reason it is not possible to deploy all the containers in a gang, Volcano will not schedule that gang. This article explains in more detail the reasons for using Volcano.

Note that job preemption in Volcano relies on the priority plugin that compares the priorities of two jobs or tasks. For two jobs, it decides whose priority is higher by comparing their priorityClassName. For two tasks, it decides whose priority is higher by comparing task.priorityClassName, task.createTime, and task.id in order.

Enable Volcano scheduling in your workload

For your workload to be scheduled by Volcano, you just need to set schedulerName: volcano in your pod's spec (or batchScheduler: volcano in the SparkApplication's spec if you use the Spark Operator). By default, the workload is scheduled with the default kube-scheduler. To be consistent, we will ensure that the same scheduler is used for driver and executor pods.
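To illustrate the schedulerName setting, here is a minimal sketch that stamps the same scheduler onto a driver and an executor pod template. The template contents and the helper name with_scheduler are hypothetical; with native Spark 3.x, one possible way to hand such templates to Spark is through the spark.kubernetes.driver.podTemplateFile and spark.kubernetes.executor.podTemplateFile options.

```python
import copy
import json


def with_scheduler(pod_template, scheduler_name="volcano"):
    """Return a copy of a pod manifest with spec.schedulerName set."""
    pod = copy.deepcopy(pod_template)
    pod.setdefault("spec", {})["schedulerName"] = scheduler_name
    return pod


# Minimal, hypothetical pod template; a real one would carry more fields.
base_template = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"labels": {"app": "demo"}},
    "spec": {},
}

driver_template = with_scheduler(base_template)
executor_template = with_scheduler(base_template)

# Driver and executors must agree on the scheduler.
same_scheduler = (
    driver_template["spec"]["schedulerName"]
    == executor_template["spec"]["schedulerName"]
)
print(same_scheduler)
print(json.dumps(driver_template, indent=2))
```

Working on a deep copy keeps the base template untouched, so it can be reused for pods that should stay on the default kube-scheduler.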

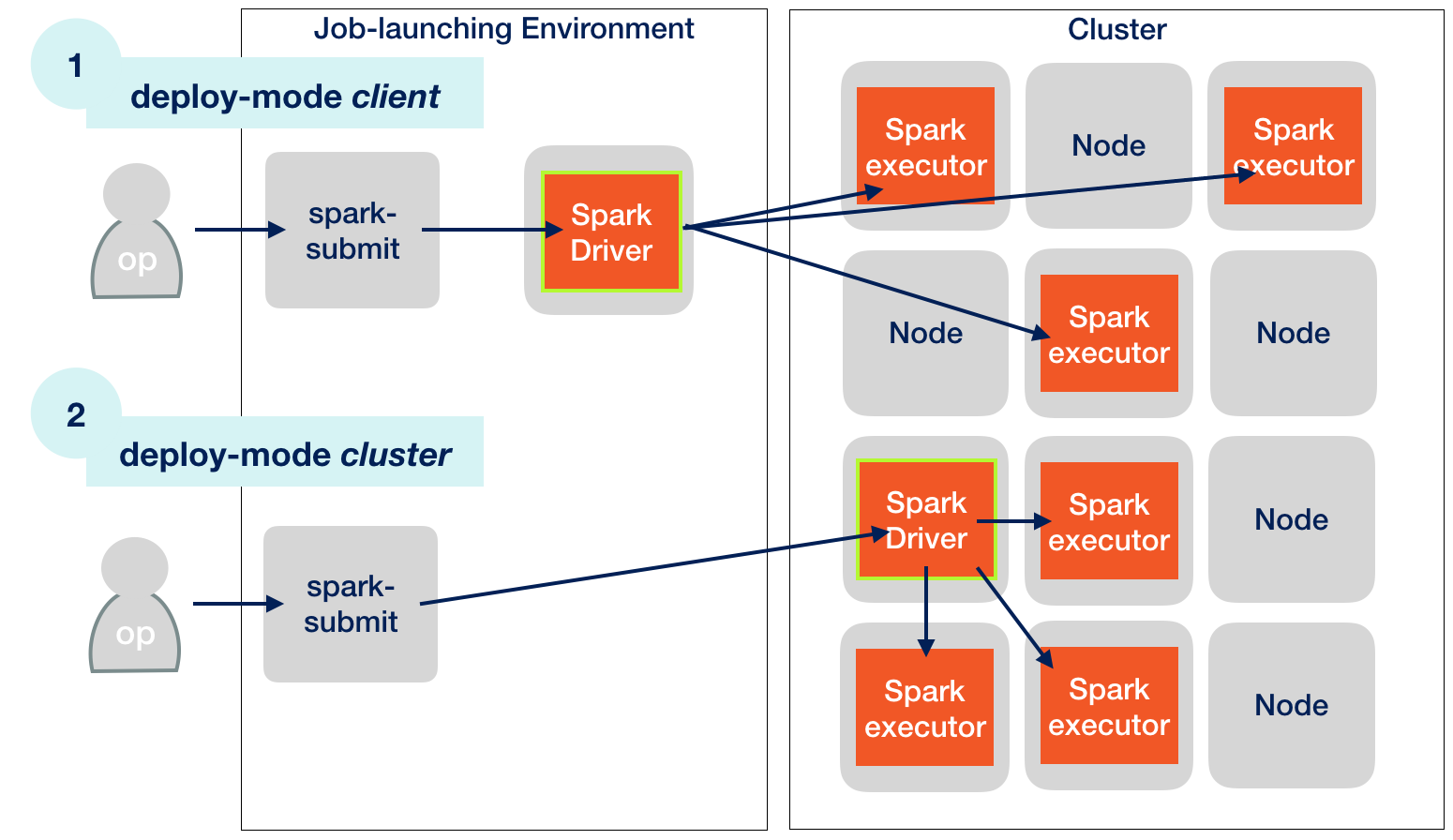

Remember, Spark applications run as independent sets of processes on a cluster, coordinated by the SparkContext object in your main program, called the driver. Once connected to the cluster manager, the SparkContext acquires executors on nodes in the cluster, which are the processes that run computations and store data for your application.
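Because the driver is the process that acquires executors, on Kubernetes its pod needs the RBAC permissions mentioned earlier. The following sketch generates those manifests from Python: the service account driver-sa and namespace spark-jobs come from the text, while the role name is a hypothetical choice. The rule only covers the pod verbs listed in this article; a real driver may well need more (an assumption to check against your Spark version).

```python
import json

NAMESPACE = "spark-jobs"
SERVICE_ACCOUNT = "driver-sa"
ROLE_NAME = "spark-driver-role"  # hypothetical name, not from the article


def driver_rbac_manifests(namespace=NAMESPACE, sa=SERVICE_ACCOUNT, role=ROLE_NAME):
    """Build ServiceAccount, Role and RoleBinding manifests as plain dicts."""
    service_account = {
        "apiVersion": "v1",
        "kind": "ServiceAccount",
        "metadata": {"name": sa, "namespace": namespace},
    }
    role_obj = {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "Role",
        "metadata": {"name": role, "namespace": namespace},
        "rules": [
            {
                "apiGroups": [""],  # core API group, where pods live
                "resources": ["pods"],
                "verbs": ["create", "get", "list", "delete"],
            }
        ],
    }
    binding = {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "RoleBinding",
        "metadata": {"name": f"{role}-binding", "namespace": namespace},
        "subjects": [
            {"kind": "ServiceAccount", "name": sa, "namespace": namespace}
        ],
        "roleRef": {
            "apiGroup": "rbac.authorization.k8s.io",
            "kind": "Role",
            "name": role,
        },
    }
    return [service_account, role_obj, binding]


# Wrap the objects in a List so they can be written to a single file.
manifest_list = {"apiVersion": "v1", "kind": "List", "items": driver_rbac_manifests()}
print(json.dumps(manifest_list, indent=2))
```

The printed JSON can be saved to a file and applied with kubectl apply -f, which accepts JSON as well as YAML.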

Until not long ago, the way to go to run Spark on a cluster was either with Spark's own standalone cluster manager, Mesos or YARN. In the meantime, the Kingdom of Kubernetes has risen and spread widely.

And when it comes to running Spark on Kubernetes, you now have two choices:

- Use Spark's "native" Kubernetes capabilities: Spark can run on clusters managed by Kubernetes since Spark 2.3. Kubernetes support was still flagged as experimental until very recently, but as per SPARK-33005 Kubernetes GA Preparation, Spark on Kubernetes is now fully supported and production ready! 🎊
- Use the Spark Operator, proposed and maintained by Google, which is still in beta version (and always will be).

This series of 3 articles tells the story of my experiments with both methods, and how I launch Spark applications from Python code.
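For the native option, launching from Python can be as simple as assembling a spark-submit command line. This is only a sketch under assumptions: the API server URL, image name, and application path are hypothetical placeholders, and the defaults reuse the spark-jobs namespace and driver-sa service account that appear elsewhere in this article.

```python
import shlex


def spark_submit_command(api_server, image, app_resource,
                         namespace="spark-jobs", service_account="driver-sa",
                         name="demo-app", executors=2):
    """Return the argv list for a cluster-mode spark-submit on Kubernetes."""
    conf = {
        "spark.kubernetes.namespace": namespace,
        "spark.kubernetes.container.image": image,
        "spark.kubernetes.authenticate.driver.serviceAccountName": service_account,
        "spark.executor.instances": str(executors),
    }
    cmd = [
        "spark-submit",
        "--master", f"k8s://{api_server}",
        "--deploy-mode", "cluster",
        "--name", name,
    ]
    for key, value in conf.items():
        cmd += ["--conf", f"{key}={value}"]
    cmd.append(app_resource)
    return cmd


# Hypothetical values, for illustration only.
submit_cmd = spark_submit_command(
    "https://kubernetes.example.com:6443",
    "registry.example.com/demo/spark-py:v1",
    "local:///opt/spark/examples/src/main/python/pi.py",
)
print(shlex.join(submit_cmd))

# To really submit, something like this could be used:
# import subprocess
# subprocess.run(submit_cmd, check=True)
```

The local:// scheme tells Spark that the application file is already inside the container image rather than on the submitting machine.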
